Accelerating autonomy development: Replicating commercial successes

How can the Air Force and other services accelerate autonomy development and deployment? By taking lessons learned from the commercial autonomy industry.

Welcome back to the Nexus Newsletter. In this edition, we highlight how synthetic data can accelerate the development of robust target recognition capabilities, invite you to a happy hour at AFA Air, Space & Cyber, share a recent article on autonomy development featured in The Disruptor, the DASN Ships newsletter, and provide our takes on recent news from the nexus of autonomy and national security.

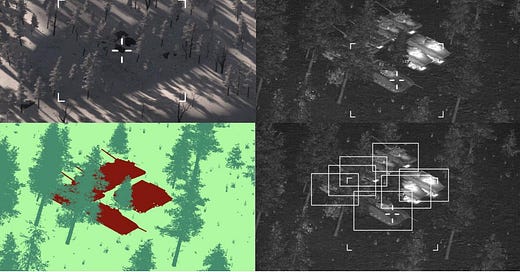

How synthetic data accelerates target recognition

A high-end fight with a near-peer adversary will involve a rapidly changing battlespace, a vast number of targets, and new, never-before-seen platforms. The conflict in Ukraine illustrates this: a wide range of systems and platforms have played a role, from WWII-era tanks and artillery to next-generation unmanned aerial, ground, and maritime systems.

The Air Force will face numerous moving targets across the ground, aerial, and maritime domains. Some will be known in advance, but many will likely be revealed only during the conflict itself. Programs focused on next-generation target recognition, sensing, and engagement are critical to this effort.

Perception systems lie at the heart of this challenge. Systems must be able to quickly scan the battlespace, identify friendly and adversary systems across domains, and share that information with human operators. This presents a significant challenge, but, thankfully, there is a roadmap to achieve this capability: Advanced driver-assistance systems (ADAS) and autonomous vehicles (AVs) operating at scale on roadways across the country already rely on perception systems to accurately detect, classify, and act on their surroundings.

For example, ADAS and AV perception systems must be prepared to detect and classify a vast range of objects, from traffic signs to rare obstacles like trash, wildlife, and other debris that could potentially cross a vehicle’s path. To train these perception models, ADAS and AV programs require significant amounts of diverse, accurately labeled data. These advanced commercial capabilities should be leveraged for Air Force use cases.

A robust system for collecting, ingesting, managing, triaging, and labeling real-world data is the first step toward building a comprehensive corpus of training data for the perception system. Even for more “routine” objects like traffic signs, however, large variations in appearance, illumination, weather, language, and occlusion make it prohibitively expensive, time-consuming, or impossible to collect real-world data that covers every possible scenario.

The same challenges apply to perception systems deployed for military applications. Military perception systems must be able to quickly and consistently differentiate between friendly, adversary, and civilian systems within a battlespace, no matter the weather, lighting, and occlusions at play. And, when it comes to adversary systems, collecting real-world data to train a perception system is often out of the question.

Synthetic data is the key that helps ADAS and AV programs around the world accelerate the development of perception models robust enough for real-world production-level deployments.

By augmenting real-world data with high-fidelity, physically accurate synthetic data, autonomy development teams can quickly expand their training corpus, improve model performance on rare classes, and increase development velocity at minimal cost.

In fact, Applied’s perception simulation team recently conducted a case study demonstrating that synthetic data can significantly reduce the need for labeled real-world data: a synthetic traffic sign database allowed the team to use 90% less real-world data and still achieve 99.8% classification accuracy. Given the large variations in traffic sign appearance, illumination, weather, and occlusion, synthetic data gives autonomy development teams an opportunity to accelerate perception model development and reduce costs while still ensuring that the resulting models are robust and performant.
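The case study's actual pipeline is not described here, so the following is only a minimal sketch of what "using 90% less real-world data" could look like at the dataset-assembly step: keep a random 10% of the labeled real images and supplement them with synthetic ones. The `Sample` record, `build_training_set`, and `class_counts` are hypothetical names chosen for illustration, not part of any Applied Intuition product or API.

```python
import random
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """Hypothetical labeled training example (image reference plus class label)."""
    image_id: str
    label: str
    source: str  # "real" or "synthetic"


def build_training_set(real, synthetic, real_fraction=0.1, seed=0):
    """Keep a random subset of real samples and add every synthetic sample.

    real_fraction=0.1 corresponds to using 90% less real-world data.
    A fixed seed makes the subset reproducible across runs.
    """
    if not 0.0 < real_fraction <= 1.0:
        raise ValueError("real_fraction must be in (0, 1]")
    rng = random.Random(seed)
    n_real = round(len(real) * real_fraction)
    kept_real = rng.sample(list(real), n_real)
    mixed = kept_real + list(synthetic)
    rng.shuffle(mixed)  # interleave real and synthetic samples
    return mixed


def class_counts(samples):
    """Count samples per (label, source) to check coverage of rare classes."""
    return Counter((s.label, s.source) for s in samples)


if __name__ == "__main__":
    # Illustrative data only: a common class in real data, a rare class
    # filled in with synthetic renders.
    real = [Sample(f"r{i}", "stop", "real") for i in range(1000)]
    synthetic = [Sample(f"s{i}", "yield", "synthetic") for i in range(500)]
    mixed = build_training_set(real, synthetic, real_fraction=0.1, seed=42)
    print(len(mixed), class_counts(mixed))
```

In practice a team would likely stratify the real subset by class so that rare classes are not dropped entirely; the uniform random subset here is the simplest possible choice, not a recommendation.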

Similarly, synthetic data is the key that will enable the Air Force to develop systems that are capable of identifying, classifying, and tracking numerous moving targets across aerial, ground, and maritime environments. While it is often impossible to collect adequate quantities of real-world data on adversary systems to train a target recognition algorithm, high-fidelity, physically accurate synthetic data with pixel-level annotations enables development teams to rapidly generate the training data needed to accurately and reliably identify targets in congested operating environments.

To compete in a high-end fight with a near-peer adversary, the ability to identify and track vast numbers of adversary systems will prove critical to success. Programs focused on next-generation target recognition, sensing, and engagement should draw on lessons from perception systems deployed in the commercial autonomous vehicle space and leverage high-fidelity synthetic data to accelerate development and improve reliability.

To learn more about Applied’s synthetic data solutions, contact our team.

Meet us at AFA Air, Space & Cyber

We’re heading to the Air Force Association’s Air, Space & Cyber conference next week! Stop by booth PBL7 to meet our team and learn how we’re accelerating aerial autonomy by bringing commercial best practices for autonomy development to the Air Force.

We’re also hosting a happy hour at McCormick & Schmick’s in National Harbor from 5:00 to 7:00 PM on September 11th. Drinks and food will be provided; we hope you’ll join us!

Autonomy development and lessons from Silicon Valley

How can the Navy create a viable pathway to operating unmanned and autonomous systems in the next decade? In the latest edition of The Disruptor, a quarterly newsletter from Dorothy Engelhardt, Director of Unmanned Systems at DASN Ships, our team argues that lessons learned from Silicon Valley may offer valuable clues.

Key takeaways:

Begin from first principles

Think use cases, not definitions

Autonomy is a continuum, not a binary

Getting the software right is critical

Incorporate testing and evaluation from the get-go

Buy rather than build software (when it makes sense)

Incentivize partnerships between traditional hardware vendors and autonomy software startups

News we’re reading

Autonomous systems are gaining momentum in the national security space. Below, we’ve pulled key quotes from recent articles of interest, plus brief commentary from Applied Intuition’s government team:

Breaking Defense | ‘Replicator’ revealed: Pentagon initiative to counter China with mass-produced autonomous systems

Key quote: Scaling, Hicks said, is the problem Replicator will most directly try to solve.

“We’ve looked at that innovation ecosystem [and] we think we’ve got some solutions in place… across many of those pain points, but the scaling piece is the one that still feels quite elusive — scaling for emerging technology,” she said during a Q&A portion of her presentation. “And that’s where we’re really going to go after with Replicator: How do we get those multiple thousands produced in the hands of warfighters in 18 to 24 months?

“I mean it’s not without risk; we’ve got [to] take a big bet here, but what’s leadership without big bets and making something happen?”

Hicks said the Replicator name refers not only to the mass production of individual systems, but the push to replicate “how we will achieve” the mass production goal, “so we can scale whatever’s relevant in the future again and again and again.” It’s a culture change as much as a technological one, she said.

Our take: Leveraging platforms that are “small, smart, cheap, and many” via the Replicator initiative is exciting. The focus on replicating procedures and best practices that have been proven effective at scaling autonomy development beyond R&D and into large-scale production is particularly interesting. Robust development and testing infrastructure designed to accelerate program timelines, reduce costs, and improve outcomes will be critical, particularly if the objective is to “field attritable autonomous systems at the scale of multiple thousands, in multiple domains, within the next 18-to-24 months.” We’re looking forward to learning more about the development and testing infrastructure that will make that possible.

New York Times | A.I. Brings the Robot Wingman to Aerial Combat

Key quote: “But you can present potential adversaries with dilemmas — and one of those dilemmas is mass,” General Jobe said in an interview at the Pentagon, referring to the deployment of large numbers of drones against enemy forces. “You can bring mass to the battle space with potentially fewer people.”

The effort represents the beginning of a seismic shift in the way the Air Force buys some of its most important tools. After decades in which the Pentagon has focused on buying hardware built by traditional contractors like Lockheed Martin and Boeing, the emphasis is shifting to software that can enhance the capabilities of weapons systems, creating an opening for newer technology firms to grab pieces of the Pentagon’s vast procurement budget.

“Machines are actually drawing on the data and then creating their own outcomes,” said Brig. Gen. Dale White, the Pentagon official who has been in charge of the new acquisition program.

Our take: As we’ve said before, autonomy is fundamentally a software problem, not a hardware problem. As the Pentagon prioritizes fielding autonomous systems at speed and scale, it’s imperative that the software piece comes before the shiny new hardware. It’s also important to understand the right order of operations for autonomy software: supporting infrastructure and tooling for data collection and management, as well as for software development and testing, is a necessary first step. With that infrastructure in place, autonomy program managers should focus on the core autonomy functionality that drives or flies the system at hand before broadening their focus to the capabilities that lie on top of that stack.

C4ISRNET | Defense Innovation Unit embed to connect INDOPACOM to commercial tech

Key quote: As the Pentagon’s liaison to commercial companies, the DIU official embedded in the directorate will play a key role in making sure the connection between technological needs and industry solutions is strong, DIU Director Doug Beck told reporters Aug. 29 on the sidelines of the conference.

“The person will be somebody who is that dual-fluency talent, who combines deep expertise in relevant technical areas from the commercial sector as well as doing it live at DIU in a leadership role for a while, working with concrete commercial solutions to DoD problems,” Beck said.

The leader, who will serve as the Joint Mission Accelerator Directorate’s deputy director as well as the CTO, will be joined by a team of DIU staff working throughout INDOPACOM, he added.

Our take: Putting the “DOD’s embassy in the Valley” in direct contact with end users in the COCOMs, and INDOPACOM specifically, is a positive development. DIU has proven itself to be effective connective tissue between the Department and the innovation engine of Silicon Valley, and we’re confident that deepening its connection with warfighters will increase its effectiveness and its ability to communicate warfighter requirements back to nontraditional defense contractors.